Concepts of AI

AI as a goal for humankind

There are over 66,000 species of vertebrates (Finnish: selkärankainen) on planet Earth. What makes Homo sapiens (“wise man”) different from the others is possibly its intelligence. Intelligence has made us superior to other species, allowing us to control the world and use its resources for our own good. Because of the importance of intelligence, we have always been interested in understanding how we think and what intelligence is.

Dr Yuval Noah Harari (PhD in history) discusses in his book [3] what the next goals of humankind will be once the fight against climate change is won. Harari’s rather radical view is that becoming god-like, that is, extending the capabilities of human beings and creating life and intelligence of our own, has always been a human dream, and that after recent developments these can be considered serious goals today. Reaching them requires not only understanding what intelligence is, but also the capability to implement intelligent artifacts.

We already extend human senses, memory and information processing capacity with technology, and many researchers continue to strive for the development of artificial intelligence (AI) and artificial life (AL), as they always have.

History

Harari’s point can be at least partly supported by studying the ancient myths of AI and AL.

AI from myths to robots

Humankind has dreamed of creating humanoid robots and intelligent structures for as long as records exist.

Talos was a mythical giant artifact in Greek mythology: a humanlike bronze statue that was forged with the help of magical powers and animated by an ethereal fluid called ichor. When the ichor was let out, the statue stopped functioning and “died”. The mythical Talos had superhuman abilities and intelligence. Copying and improving on the human being is still one of the goals of AI research. Frankenstein’s monster is a similar fictional story from modern times, and humanoid robots are becoming reality today.

While Talos was animated by magical powers, Frankenstein’s monster was a dead body brought to life using the then-recent scientific methods of alchemy, chemistry and electricity. Today’s humanoid robots are powered by neural networks and deep learning, which are our current technology for creating intelligence.

Scientific development of the ingredients for AI

More recent development of artificial intelligence is often based on formal reasoning, the mechanization of human inference. Formal reasoning was first developed by philosophers and mathematicians, for example deductive reasoning through syllogisms by Aristotle (384-322 BCE), geometry by Euclid (c. 300 BCE), algebra by al-Khwārizmī (c. 780-850 CE) and parsimonious reasoning by William of Ockham (1285-1347 CE).

Charles Babbage (1791-1871) designed the Analytical Engine, a theoretical concept of a programmable digital computer, and Ada Lovelace (1815-1852) speculated that it could be used to compose music of any complexity. With them, the ideas of hardware and algorithm (software) were born.

Computer science began in the early 20th century, still without computers, with logicians such as Kurt Gödel (1906-1978), who studied the limits of provability of logical theorems. His second incompleteness theorem, for example, states that a consistent system of axioms whose theorems can be listed, and which is strong enough to express basic arithmetic, cannot prove its own consistency. A few years later, Alan Turing (1912-1954) formalized the concepts of algorithm and computation using an abstract logical machine, the Turing machine. During World War II, Turing also worked on breaking cryptographically secured messages, contributing to the design of the electromechanical Bombe used against the German Enigma cipher.

In the 1950s, AI and computers developed further. Research had shown that the human brain consists of neurons, and scientists created artificial neurons that perform simple logical functions and connected many of them into artificial neural networks (ANN) for more complex operations. The goal was to mimic the structure of the human brain in order to make intelligent decisions. Researchers also began to study the processing of symbols in addition to numbers, and the idea emerged that a computer could have a mind as humans do.
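An artificial neuron of this kind can be sketched as a threshold unit that outputs 1 when the weighted sum of its inputs is large enough. The minimal Python sketch below trains a single unit with the classic perceptron learning rule so that it computes the logical AND function; the training data, learning rate and number of epochs are illustrative assumptions, not taken from the text.

```python
def predict(weights, bias, x):
    """Threshold unit: output 1 if the weighted sum plus bias is positive, else 0."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Perceptron learning rule: w <- w + lr * (target - prediction) * x."""
    n_inputs = len(samples[0][0])
    weights, bias = [0.0] * n_inputs, 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)  # 0 when the prediction is right
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Truth table of logical AND: ((input1, input2), target output)
and_data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(and_data)
print([predict(w, b, x) for x, _ in and_data])  # → [0, 0, 0, 1]
```

A single unit like this can only represent linearly separable functions such as AND and OR; a function such as XOR needs more than one layer of units, which is one reason neurons are connected into networks.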

The research field of AI is generally said to have been established at the Dartmouth Conference of 1956. After the conference, the field developed very rapidly and substantial resources were invested in it.

Discussion point

In the movie Blade Runner (1982), agent Deckard hunts replicants (AI robots) made by the mighty Tyrell Corporation. Watch the scene Deckard Meets Rachel and consider: how did Deckard test whether Rachel is a replicant or a human? What is the connection with the so-called Turing test? Why was it so difficult for him to find out the truth about Rachel? The answer to the last question is in the following scene, Deckard Meets Rachel, scene continues, starting from 1:15. How is that related to the self-consciousness of AI?

Historical methods for AI

Axiom-based AI was developed before the 1960s, but it was then noticed that a small number of axioms is too weak for solving complex problems. Instead, it was acknowledged that it is important to include a rich knowledge base in the system. This development led to so-called knowledge-based systems [6].

Reasoning as search: Some problems can be solved by searching the space of possible actions step by step until a solution is found.
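As a minimal sketch of reasoning as search, the Python code below runs a breadth-first search over the states of the classic water-jug puzzle: with a 4-litre jug A and a 3-litre jug B, measure exactly 2 litres into jug A. The puzzle, the function names and the choice of breadth-first search are illustrative assumptions; the point is that the solver only knows the legal actions and a goal test, and explores the state space step by step.

```python
from collections import deque


def successors(state, caps=(4, 3)):
    """Yield (action, new_state) pairs reachable from `state` in one step."""
    a, b = state
    cap_a, cap_b = caps
    yield "fill A", (cap_a, b)
    yield "fill B", (a, cap_b)
    yield "empty A", (0, b)
    yield "empty B", (a, 0)
    pour = min(a, cap_b - b)  # pour A into B until A is empty or B is full
    yield "pour A->B", (a - pour, b + pour)
    pour = min(b, cap_a - a)  # pour B into A until B is empty or A is full
    yield "pour B->A", (a + pour, b - pour)


def bfs(start, is_goal):
    """Breadth-first search: return the shortest list of actions to a goal state, or None."""
    frontier = deque([start])
    came_from = {start: None}  # state -> (previous state, action that reached it)
    while frontier:
        state = frontier.popleft()
        if is_goal(state):
            plan = []
            while came_from[state] is not None:
                previous, action = came_from[state]
                plan.append(action)
                state = previous
            return plan[::-1]
        for action, nxt in successors(state):
            if nxt not in came_from:  # skip states that have already been reached
                came_from[nxt] = (state, action)
                frontier.append(nxt)
    return None


plan = bfs((0, 0), lambda s: s[0] == 2)
print(len(plan), plan)
```

Because breadth-first search expands states in order of distance from the start, the first plan it finds uses as few actions as possible (six, for this puzzle).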

Natural language processing (NLP): Since the early days of AI, natural communication with human beings has been one of its important goals. NLP consists of understanding written or spoken natural language, mapping the semantics of natural-language concepts and synthesizing written or spoken text. These goals are directly related to the Turing test. When Alan Turing was asked whether a computer could ever be as intelligent as a human being, he is said to have answered that there could at least be a seemingly intelligent machine that communicates in natural language, mimicking human behavior so closely that a human observer cannot distinguish it from a human being. Passing the Turing test has long been a goal of natural language processing and AI.

AI in Micro worlds: It was realized that AI ideas can be implemented more effectively in a reduced world from which unnecessary details have been removed. A Micro World is an artificially simplified description of reality in which adequate AI models can be built. Plenty of research focused on the so-called blocks world, which consists only of coloured blocks of different shapes and sizes on a flat surface. These concepts strongly influenced simple natural language processing systems, which were able to carry out conversations in simple, stereotypical situations without actually understanding the content of the conversation. Many machine vision systems also model the world with simple geometric shapes extracted from images.
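A blocks world is small enough to be represented directly in code. The Python sketch below is an illustrative assumption rather than a reconstruction of any historical system: the world is a tuple of stacks (bottom block first), and the only action is moving the top block of one stack onto another. Colours are kept as block names, while shapes and sizes are left out for brevity.

```python
State = tuple[tuple[str, ...], ...]  # each inner tuple is one stack, bottom block first


def move(state: State, src: int, dst: int) -> State:
    """Move the top block of stack `src` onto stack `dst` and return the new state."""
    if src == dst or not state[src]:
        raise ValueError("illegal move")
    stacks = [list(s) for s in state]
    block = stacks[src].pop()
    stacks[dst].append(block)
    return tuple(tuple(s) for s in stacks)


def legal_moves(state: State) -> list[tuple[int, int]]:
    """All (src, dst) pairs where `src` has a block to move and `dst` is another stack."""
    n = len(state)
    return [(i, j) for i in range(n) for j in range(n) if i != j and state[i]]


start = (("red", "green"), ("blue",), ())   # green sits on red; the third stack is empty
print(move(start, 0, 2))  # → (('red',), ('blue',), ('green',))
```

With only these two functions, the whole micro world is defined, and a search procedure such as breadth-first search can plan how to rearrange the blocks into any goal configuration.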

Some of these historical methods have been so fundamental that they are still in use. The good old perceptron is at the heart of modern deep learning methods, and NLP is still an active research area. The idea of Micro Worlds has been replaced by a discussion of weak and strong AI.

Weak AI or narrow AI refers to AI implementations for a narrow task in a limited environment. For example, the Siri assistant can understand speech, search for data and create seemingly intelligent answers, but it is not intelligent in the same way as a human being. Most AI implementations today are examples of weak AI.

Strong AI or general AI would be AI that actually is intelligent and can solve general problems in the same way as a human being. Self-consciousness is sometimes also listed as a requirement for strong AI.

Discussion point

What is self-consciousness? Could an AI gain self-consciousness simply by including itself in its simulation of the world? What would be the impacts of self-consciousness?

Key drivers for the development of AI

Computing power: AI requires powerful information processing, which is provided by fast computers. The rapid development of computers has enabled the rapid development of AI.

Availability of data: Modern AI is largely based on data. The digitalisation of society has created large numbers of embedded sensors and social media services, which produce vast amounts of data that can be used for training AI to solve problems. It has been claimed that 90% of all data ever created was created during the preceding two years.

Algorithms and tools: The development of AI algorithms and tools makes AI accessible to everyone and accelerates the development of AI itself.

Definition of AI

The term artificial intelligence (AI) was taken into use as early as 1956, in connection with the Dartmouth Conference, but it is still somewhat hard to define. Here are some common definitions.

According to the dictionary definition, AI is present when a computer or a computer-controlled robot behaves like an intelligent being.

Artificial intelligence (AI), the ability of a digital computer or computer-controlled robot to perform tasks commonly associated with intelligent beings.

-Encyclopædia Britannica

B. J. Copeland [11] agrees with this definition and explains that humans are among those intelligent beings and can therefore serve as a measure of intelligence. He adds that not everything a human does is a manifestation of intelligence, but reasoning, discovering meaning, generalizing and learning from past experience are truly intelligent behaviour.

Artificial intelligence (AI), the ability of a digital computer or computer-controlled robot to perform tasks commonly associated with intelligent beings. The term is frequently applied to the project of developing systems endowed with the intellectual processes characteristic of humans, such as the ability to reason, discover meaning, generalize, or learn from past experience.

Wikipedia treats machine intelligence as a synonym for artificial intelligence and also recognizes the intelligence of beings other than humans:

In computer science, artificial intelligence (AI), sometimes called machine intelligence, is intelligence demonstrated by machines, in contrast to the natural intelligence displayed by humans and animals.

-Wikipedia

Russell et al. [8] conclude that AI can be human-like thinking and acting demonstrated by artifacts, but also simply rational thinking and acting. Rational thinking is decision making based on correct rules, the “laws of thought”. The framework for rational thinking can be based, for example, on logic, mathematics or statistics.

Definition

Thinking is using knowledge and information to make plans, to interpret and model the world, and to constructively interact with the world and make predictions about it.

Definition

AI is “human like” or simply rational thinking, decision making, and acting demonstrated by artifacts.

Discussion point

What is the measure of intelligence? What if we meet an extraterrestrial being or an AI that is more intelligent than us? How could we know that it is more intelligent than us?

Definitions of different forms of AI according to [8]

Human-like thinking: Studying how humans think and using the same methods in AI. This approach was used in early AI development, but many contemporary methods differ from human thinking.

Acting humanly: Computer systems that try to carry out humanlike conversations so that they are indistinguishable from a real person. The target may be to pass the Turing test. Humanoid robots try to mimic human behavior. Acting like a human does not necessarily require a machine to think like a human.

Rational thinking: Making correct decisions based on formal rules.

Acting rationally: An artificial agent acts to achieve the best outcome or the best expected outcome in case of uncertainty.

Discussion point

Do the rationality criteria provide a definition of AI that is independent of human intelligence?

Intelligent agents

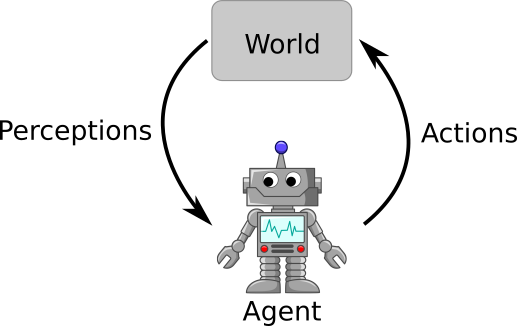

An intelligent agent is something that acts and tries to pursue goals. An artificial intelligent agent is a machine or software. It often has sensors to perceive the world, and it may take actions to interact with the world, as illustrated in the figure above.

Definition

A rational agent is an agent that always selects the action that is expected to maximize its performance measure, based on its perceptions and built-in information about the world.
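The definition can be sketched as an argmax over actions. In the Python sketch below, the table of actions, outcome probabilities and performance scores is hypothetical and stands in for the agent's built-in information; the point is that a rational agent picks the action with the highest expected performance, which is not necessarily the action with the best possible outcome.

```python
# Hypothetical built-in knowledge: for each action, a list of (probability, performance) outcomes.
OUTCOMES = {
    "take highway": [(0.8, 10.0), (0.2, -20.0)],   # usually fast, sometimes badly jammed
    "take side road": [(1.0, 5.0)],                # always moderately fast
}


def expected_performance(action):
    """Probability-weighted average of the performance scores of an action's outcomes."""
    return sum(p * score for p, score in OUTCOMES[action])


def rational_choice(actions):
    """Select the action that maximizes expected performance."""
    return max(actions, key=expected_performance)


print(rational_choice(OUTCOMES))  # → take side road
```

Here the highway has the best possible outcome (10), but its expected performance is only 0.8 · 10 + 0.2 · (−20) = 4, so the side road (5) is the rational choice under this model.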

Discussion point

Can an intelligent agent be meaningful without perception, without actuators, or without either?

Discussion point

If two rational agents both maximize their performance measure based on their perceptions and built-in information, can one be better than the other?

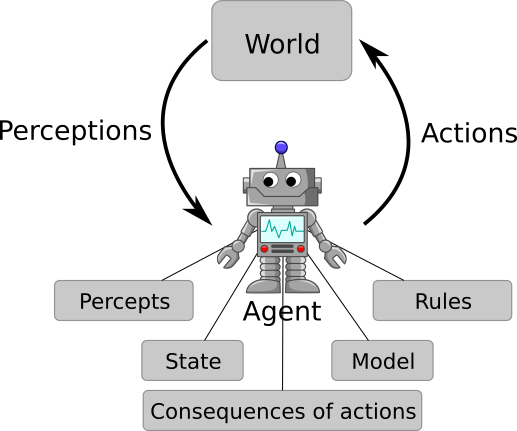

Intelligent agents can be divided into four classes based on their cognitive abilities and level of sophistication:

Simple reflex agents

Model-based agents

Goal-based agents

Utility-based agents

Simple reflex agents

These agents are reactive systems that perform simple actions based on their perception and/or internal state. A very simple example of this kind of agent is an outdoor light controller based on a motion sensor. Its operation can be described, for example, using the following simple rule:

$$\text{light on} \iff \text{motion detected} \land \text{dark} \qquad (1)$$

Rule (1) combines two input variables with a simple logical rule to decide on an action. In general, the decision system can be arbitrarily complex and can also include inference methods other than logic.
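A simple reflex agent of this kind is little more than a condition-action rule. The Python sketch below assumes that the two inputs are a motion sensor and a darkness (light-level) sensor, both reduced to booleans; the function name and the output strings are hypothetical.

```python
def outdoor_light_agent(motion_detected: bool, is_dark: bool) -> str:
    """Condition-action rule: switch the light on only when there is motion and it is dark.

    The agent keeps no memory and no model; the decision depends only on the current percept.
    """
    if motion_detected and is_dark:
        return "light on"
    return "light off"


for motion in (False, True):
    for dark in (False, True):
        print(f"motion={motion}, dark={dark} -> {outdoor_light_agent(motion, dark)}")
```

Because the agent reacts only to what it perceives right now, it cannot, for example, keep the light on for a while after the motion stops; that would require some internal state.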

Fig. 1 The simple reflex agent makes its decisions based only on the perceptions and decision rules.

Because the agent acts based only on its perceptions, it runs into trouble if the world is only partially observable.

For example, an automated vehicle controlled by a simple reflex agent could drive on the road and stay in the lane between the white lines. It could perceive the world with a camera, decide whether it is too close to the left side and, if so, turn the steering wheel one step to the right.

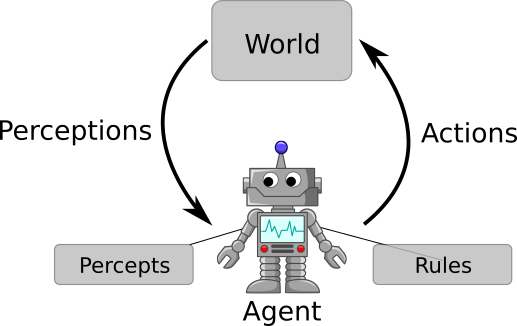

Model-based reflex agents

In addition to perception and actuators, the model-based agent uses a model that describes the necessary aspects of how the world behaves. By using the model, the agent “understands” something about how the world behaves and can make predictions on how its actions change the world. If the world is only partially observable, the agent can build a model that also covers the hidden information.

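For example, the collision avoidance system of an autonomous car can predict that, even though the cars are still far away from each other, a collision will happen after a certain time, because the car in front is driving slower. The time to collision can be estimated using physics:

$$t_c = \frac{d}{v_1 - v_2}, \qquad v_1 > v_2,$$

where $d$ is the distance between the cars, $v_1$ is the speed of the agent's own car and $v_2$ is the speed of the car in front.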

In this case, the agent needs to perceive the distance between the cars, using a radar for example, and its own speed, using GPS. The speed of the car in front is either measured by the radar or estimated with the model, for example from how fast the measured distance shrinks. The agent uses its own physical model to estimate the time to collision and may decide to apply the necessary braking force by activating the brakes. The agent would probably also need a model of Newtonian mechanics to estimate the effect of the braking force on the car's speed.
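The following Python sketch shows how such a model-based agent might combine its percepts with a simple physical model. It assumes the agent gets two successive radar distance readings and its own speed, and uses the model to estimate the unobserved speed of the car in front before computing the time to collision. The function names, the units (metres and seconds) and the 4-second braking threshold are hypothetical choices for illustration.

```python
def time_to_collision(distance_m, own_speed_ms, front_speed_ms):
    """t_c = d / (v1 - v2); infinite when the agent is not closing in on the car ahead."""
    closing_speed = own_speed_ms - front_speed_ms
    if closing_speed <= 0:
        return float("inf")
    return distance_m / closing_speed


def estimate_front_speed(d_prev, d_now, dt, own_speed_ms):
    """Model of the hidden state: the gap changes at rate (v2 - v1),
    so v2 = v1 + (d_now - d_prev) / dt."""
    return own_speed_ms + (d_now - d_prev) / dt


def collision_agent(d_prev, d_now, dt, own_speed_ms, brake_threshold_s=4.0):
    """Decide whether to brake, based on percepts plus the internal model."""
    front_speed = estimate_front_speed(d_prev, d_now, dt, own_speed_ms)
    ttc = time_to_collision(d_now, own_speed_ms, front_speed)
    return "brake" if ttc < brake_threshold_s else "keep speed"


# Own speed 30 m/s; the gap shrinks by 1 m in 0.1 s, so the car ahead drives 20 m/s.
print(collision_agent(61.0, 60.0, 0.1, 30.0))  # gap 60 m -> t_c = 6 s -> keep speed
print(collision_agent(31.0, 30.0, 0.1, 30.0))  # gap 30 m -> t_c = 3 s -> brake
```

A real system would filter noisy radar readings over many time steps instead of using just two of them; the two-reading estimate only illustrates how a model can fill in information that is not directly perceived.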

An automated vehicle could have a model of the road network of the city, and it could therefore turn from the current road into a named street at the right intersection.

Fig. 2 In addition to perceptions and decision rules, the model-based agent also has a model and a state of the world, which allow it to make more complex decisions than the simple reflex agent.

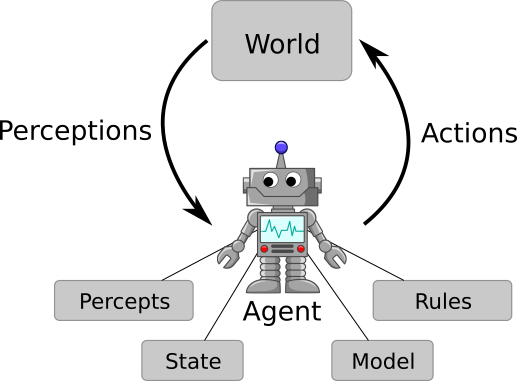

Goal-based agents

Goal-based agents do not only react to the current situation; instead, they plan how to reach their goals. Planning usually requires a model that they can search for action sequences that reach the goal. For example, an autonomous taxi can find a route to its destination by searching its model (the map) and can carry out actions (steering) to reach the goal.
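A goal-based taxi can be sketched as a search over its map model. In the Python sketch below, the city map and the place names are hypothetical, and breadth-first search is used as one possible search method: it returns the route with the fewest intersections, which is enough to reach the goal but says nothing about whether the route is fast or cheap.

```python
from collections import deque

# Hypothetical city map (the agent's model): intersection -> neighbouring intersections.
ROADS = {
    "Station": ["Market", "Park"],
    "Market": ["Station", "Harbour", "Museum"],
    "Park": ["Station", "Museum"],
    "Museum": ["Market", "Park", "Airport"],
    "Harbour": ["Market"],
    "Airport": ["Museum"],
}


def plan_route(start, goal):
    """Breadth-first search over the map; returns a list of intersections or None."""
    frontier = deque([[start]])
    visited = {start}
    while frontier:
        path = frontier.popleft()
        if path[-1] == goal:
            return path
        for nxt in ROADS[path[-1]]:
            if nxt not in visited:
                visited.add(nxt)
                frontier.append(path + [nxt])
    return None


print(plan_route("Station", "Airport"))  # → ['Station', 'Market', 'Museum', 'Airport']
```

The plan is computed before any steering happens; the agent then carries out the route as a sequence of actions.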

Fig. 3 The goal-based agents can also assess the probable consequences of their actions and are therefore capable of planning.

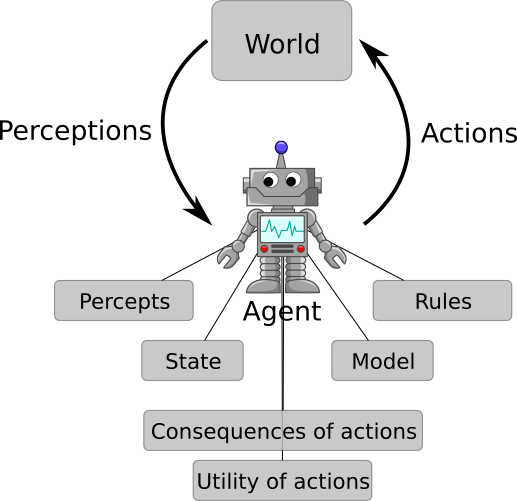

Utility-based agents

Utility is the fitness or worth of something for a given purpose. An action has higher utility if it is more useful or leads to smaller losses in the given situation. The goal of the optimization is to maximize the utility. An exactly equivalent optimization goal is to minimize losses, since loss is negative utility and utility is negative loss. Utility-based agents try to behave so that the utility gained by their actions is maximized. In other words, they try to reach their goal in an optimal way.

Fig. 4 The utility-based agents extend the goal-based and model-based agents by also considering the utility that is gained by reaching the goal through different action sequences.

For example, an automated vehicle controlled by a utility-based agent would try to find an optimal path from the current location to the destination. The utility could be, for example, the negative of the time spent or the fuel consumed, or a combination of both. The agent may have many alternative actions to take, and the utility-based agent selects the one that, according to its knowledge or beliefs, leads to the highest utility.
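In Python, a utility-based route choice can be sketched with Dijkstra's algorithm. The road map and travel times below are hypothetical, and the utility is assumed to be only the negative of the total travel time; fuel consumption or a weighted combination could be handled the same way by changing the edge costs. Minimizing total time is exactly the same as maximizing this utility.

```python
import heapq

# Hypothetical road map: travel times in minutes between neighbouring intersections.
TRAVEL_TIME = {
    "Station": {"Market": 4, "Park": 2},
    "Market": {"Station": 4, "Museum": 7},
    "Park": {"Station": 2, "Museum": 3},
    "Museum": {"Market": 7, "Park": 3, "Airport": 5},
    "Airport": {"Museum": 5},
}


def best_route(start, goal):
    """Dijkstra's algorithm; returns (path, utility) where utility = -total travel time."""
    queue = [(0, start, [start])]  # (time so far, intersection, path)
    done = set()
    while queue:
        time, node, path = heapq.heappop(queue)
        if node == goal:
            return path, -time
        if node in done:
            continue  # a faster way to this intersection was already expanded
        done.add(node)
        for nxt, minutes in TRAVEL_TIME[node].items():
            if nxt not in done:
                heapq.heappush(queue, (time + minutes, nxt, path + [nxt]))
    return None, float("-inf")


print(best_route("Station", "Airport"))  # → (['Station', 'Park', 'Museum', 'Airport'], -10)
```

Both routes via Market and via Park pass through the same number of intersections, but only a utility measure such as travel time tells the agent that the route via Park (10 minutes) is better than the route via Market (16 minutes).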

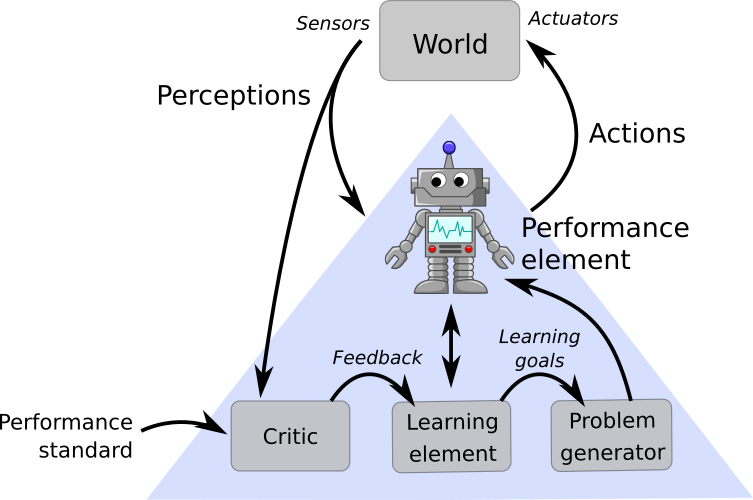

Learning agents

In 1950, Alan Turing estimated the amount of programming needed to achieve AI. Based on the methods of the 1950s, he estimated that programming an AI with capabilities similar to those of a person would take about sixty programmers fifty years of steady work. He concluded that “some more expeditious method seems desirable” and proposed a learning machine [9].

In contrast to programmed AI, learning AI learns the rules behind its actions without explicit programming. The benefit is that the agent can operate in an environment that is initially unknown. In the long run, it can also learn rules that would be very complex to program.

A learning agent consists of the following four components, see Fig. 5.

Performance element receives perceptions from the sensors and carries out actions using the actuators. This element includes the operations of the previously described agents, but here it also exchanges information with the learning element and receives suggestions from the problem generator.

Critic element observes the perceptions and uses the performance standard to assess how well the agent is performing and provides that information to the learning element.

Learning element is responsible for making improvements to the performance element according to the feedback received from the critic element.

Problem generator suggests actions leading to new and informative experiences to explore the world to find even better solutions in the long run.

Fig. 5 The learning agents extend the previous agents by adding critic, learning and problem generator elements. The critic element provides feedback to the learning element, which updates the performance element. The learning element sets new learning goals for the problem generator, which suggests new exploratory actions to the performance element to explore unknown areas of the world.
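The four components can be mapped onto a very small learning problem. The Python sketch below is a simplification under stated assumptions: it uses an epsilon-greedy multi-armed bandit (a swapped-in textbook technique, not the method of the text), a hypothetical environment with fixed rewards per route, and a learning element that does not set explicit learning goals for the problem generator. It only shows how the performance element, critic, learning element and problem generator could divide the work.

```python
import random

# Hypothetical environment: reward of each action, unknown to the agent at the start.
TRUE_REWARD = {"route A": 3.0, "route B": 5.0, "route C": 1.0}


class LearningAgent:
    def __init__(self, actions, epsilon=0.2, seed=0):
        self.estimates = {a: 0.0 for a in actions}  # knowledge used by the performance element
        self.counts = {a: 0 for a in actions}
        self.epsilon = epsilon                      # how often to explore
        self.rng = random.Random(seed)              # seeded for reproducibility

    def performance_element(self):
        """Choose the action currently believed to be best."""
        return max(self.estimates, key=self.estimates.get)

    def problem_generator(self):
        """Occasionally suggest an exploratory action; otherwise suggest nothing."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.estimates))
        return None

    def critic(self, reward):
        """Performance standard: here the feedback is simply the reward received."""
        return reward

    def learning_element(self, action, feedback):
        """Update the running-average estimate of the action that was taken."""
        self.counts[action] += 1
        self.estimates[action] += (feedback - self.estimates[action]) / self.counts[action]

    def step(self, environment):
        action = self.problem_generator() or self.performance_element()
        self.learning_element(action, self.critic(environment(action)))
        return action


agent = LearningAgent(TRUE_REWARD)
for _ in range(500):
    agent.step(TRUE_REWARD.get)
print(agent.performance_element(), agent.estimates)
```

Without the problem generator, the agent would keep choosing the first action it tried and would never discover that another route is better; exploration is what lets it find better solutions in the long run.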

Discussion point

Can any agent act meaningfully if the world is not observable at all?

Discussion point

How would an autonomous car or a robot vacuum cleaner work if it were a simple reflex, model-based, goal-based or utility-based agent? How would learning change its behaviour?